As organizations adopt generative AI use cases, they are confronted with important security and privacy risks, including:

- Governing enterprise use of generative AI to maximize benefits and minimize risks.

- Protecting data confidentiality and integrity when using generative AI systems.

- Responding to a rapidly evolving threat landscape.

Our Advice

Critical Insight

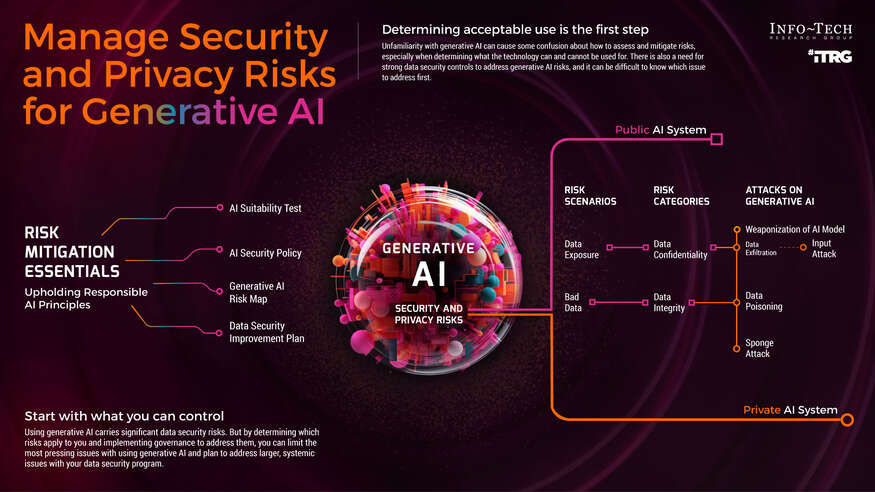

Start with what you can control. Using generative AI involves several difficult data security risks, but by determining which risks apply to you and implementing governance to manage them, you can limit the most pressing issues and plan to address larger, systemic issues with your data security program.

Impact and Result

- Determine which risks apply to your generative AI use cases.

- Draft an AI security policy to address applicable risks.

- Plan to implement necessary improvements to your data security posture.

Member Testimonials

After each Info-Tech experience, we ask our members to quantify the real-time savings, monetary impact, and project improvements our research helped them achieve. See our top member experiences for this blueprint and what our clients have to say.

10.0/10

Overall Impact

$6,499

Average $ Saved

5

Average Days Saved

| Client | Experience | Impact | $ Saved | Days Saved |
|---|---|---|---|---|
| Delta Dental Plan of New Jersey | Guided Implementation | 10/10 | $6,499 | 5 |

The ability for Jon to present his research in a condensed format saved me time and research.

Analyst Perspective

Generative AI needs an acceptable use policy

When it comes to using generative AI (Gen AI), the benefits are tangible, but the risks are plentiful, and the tactics to address those risks directly are few.

Most risks associated with Gen AI are data-related, meaning that effective AI security depends on existing maturity elsewhere in your security program. But if your data security controls are a bit lacking, that doesn’t mean you’re out of luck.

The good news is that the greatest and most common risks of using Gen AI can be addressed with an acceptable use policy. This should be top priority when considering how your organization might incorporate Gen AI into its business processes.

Some future-state planning will also help you determine which other parts of your security program require upgrades to further reduce AI-related risks. What exactly must be improved, however, will depend on your specific use case.

Logan Rohde

Senior Research Analyst, Security & Privacy, Info-Tech Research Group

Executive Summary

Your Challenge

- Governing enterprise use of Gen AI to maximize benefits and minimize risks

- Protecting data confidentiality and integrity when using Gen AI systems

- Responding to an ever-shifting threat landscape

Organizations seeking to become early adopters of Gen AI may not have security teams presently equipped to mitigate the risks. Some may need to retroactively apply governance if unauthorized AI use is already happening.

Common Obstacles

- Uncertainty assessing risks of new technology

- Difficulty implementing governance

- Immaturity of existing data security controls

Unfamiliarity with Gen AI may create confusion about how to assess and mitigate risks, especially when determining how the technology can be used. Given the additional need for strong data security controls to address Gen AI risks, it can be difficult to know which issue must be addressed first.

Info-Tech’s Approach

- Determine which risks apply to your Gen AI use cases

- Draft an AI security policy to address those risks

- Plan to address necessary improvements to data security posture

In most cases, Gen AI presents novel versions of familiar data security risks, meaning that most organizations only need to improve or expand existing controls rather than create new ones.

Info-Tech Insight

Start with what you can control. Using Gen AI carries significant data security risks. By determining which risks apply to you and implementing governance to address them, you can limit the most pressing issues with using Gen AI and plan to address larger, systemic issues with your data security program.

Your challenge

This research is designed to help organizations that need to:

- Evaluate risks associated with enterprise use of Gen AI.

- Assess suitability of existing security controls to mitigate risks associated with Gen AI.

- Communicate risks to the business and end users.

- Determine acceptable use criteria for Gen AI.

Implement Gen AI governance now – even if you don’t plan to use it. Without an official policy, end users won’t know the organization’s stance. Moreover, the technology can make it easier for bad actors to execute various types of cyberattacks, and such risks should be communicated throughout the organization.

IT leaders who believe their organization is not presently equipped to leverage Gen AI: 99% (Source: Salesforce, 2023)

Common obstacles

These barriers make this challenge difficult to address for many organizations:

- The novelty of Gen AI leaves many security and IT leaders unsure about how to evaluate the associated risks.

- What many miss about these risks, however, is that most are new versions of familiar data security risks that can be mitigated by defining acceptable use and necessary security controls to support governance of Gen AI.

- Assessing risk and defining acceptable use are the first key steps to Gen AI security improvement. Organizations must also re-evaluate their data security controls and plan necessary improvements to further mitigate risks associated with enterprise use of Gen AI.

Don’t fall behind on Gen AI risk management

6% — Organizations with a dedicated risk assessment team for Gen AI (Source: KPMG, 2023)

71% — IT leaders who believe Gen AI will introduce new data security risks (Source: KPMG, 2023)

Key risk types for Gen AI

Data security and privacy

- The greatest risk associated with using Gen AI is a loss of data confidentiality and integrity from inputting sensitive data into the AI system or using unverified outputs from it.

Data confidentiality

- Care must be taken when choosing whether to enter a given data type into an AI system. This is especially true in a publicly available system, which is likely to incorporate that information into its training data.

- Problems may still arise in a private model, particularly if the AI model is trained using personally identifiable information (PII) or personal health information (PHI), as such information may appear in a Gen AI output.
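One way to support this kind of care is a lightweight check that runs before a prompt leaves the organization. The sketch below is illustrative only and is not part of the blueprint: the pattern names, the two regular expressions, and the `screen_prompt` helper are assumptions made for this example, and real PII or PHI detection would need much broader coverage than two patterns.

```python
import re

# Hypothetical patterns for illustration only. Real PII/PHI screening needs
# far wider coverage (names, addresses, health record identifiers, and so on).
SENSITIVE_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_sensitive(prompt: str) -> list[str]:
    """Return the sorted names of every pattern that matches the prompt."""
    return sorted(
        name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(prompt)
    )


def screen_prompt(prompt: str) -> tuple[bool, list[str]]:
    """Return (allowed, matched_pattern_names) for a prompt bound for an AI system."""
    hits = find_sensitive(prompt)
    return (not hits, hits)
```

A check like this would only catch obvious cases, so it is best treated as a backstop for an acceptable use policy rather than a replacement for one.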

Data integrity

- Data integrity risk comes from repeatedly using unverified Gen AI outputs. A single output with faulty data may not cause much trouble, but if these low-quality outputs are added to databases, they may compromise the integrity of your records over time.

Top-of-mind Gen AI concerns for IT leaders

- Cybersecurity — 81%

- Privacy — 78%

(Source: KPMG, 2023)

AI model versus AI system

AI model

An algorithm used to interpret, assess, and respond to data sets, based on the training it has received.

AI system

The infrastructure that uses the AI model to produce an output based on interpretations and decisions made by the algorithm.

(Sources: TechTarget, 2023; NIST, 2023)

Info-Tech Insight

The terms AI model and AI system are sometimes used interchangeably, but they refer to two distinct, closely related things. In many cases, the tactics to secure them will overlap, but sometimes additional security controls may be required for either the model or the system.

Public versus private AI

Public AI system

- Publicly available system that benefits from users worldwide entering data that can be used to further train the AI model.

- Carries significant risk of accidental data exposure and low-quality outputs compromising data integrity.

- Risk of attack on the AI system is owned by the vendor rather than the user.

Private AI system

- Private system used only within the organization that owns it.

- Data confidentiality and privacy risks are fewer, but still exist (e.g. PII used in training data).

- Outputs should be verified for quality before being used in business processes to prevent data integrity issues.

- Owner of the system assumes the attack risks.

Attacks on Gen AI

Input attacks

- Using knowledge of how the AI model has been trained, an input is entered that causes it to malfunction (e.g. misinterpret a risk as something benign).

- Often preceded by a data exfiltration attack to learn how the model works or what data it has been trained on.

- Data confidentiality should always be protected.

Data poisoning

- Training data is tampered with to corrupt AI model integrity.

- AI system data should be audited regularly to ensure data integrity has not been compromised.

- Data resiliency best practices should be followed (e.g. backups and recovery time objective [RTO] and recovery point objective [RPO] testing).
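A regular audit of training data can be partly automated by comparing file hashes against a trusted baseline. The following is a minimal sketch under stated assumptions: it assumes training data is stored as files in a directory, and the function names (`build_manifest`, `audit`) are hypothetical helpers invented for this example. It detects changed, missing, or unexpected files only; it cannot tell whether the baseline itself was clean.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large training files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's relative path to its SHA-256 digest."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def audit(data_dir: Path, baseline: dict[str, str]) -> dict[str, list[str]]:
    """Compare the current directory contents against a trusted baseline manifest."""
    current = build_manifest(data_dir)
    return {
        "modified": sorted(k for k in baseline if k in current and current[k] != baseline[k]),
        "missing": sorted(k for k in baseline if k not in current),
        "unexpected": sorted(k for k in current if k not in baseline),
    }
```

In practice the baseline manifest would need to be stored somewhere an attacker with access to the training data cannot also modify it.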

“Because few developers of machine learning models and AI systems focus on adversarial attacks and using red teams to test their designs, finding ways to cause AI/ML systems to fail is fairly easy.” – Robert Lemos, Technology Journalist and Researcher, Lemos Associates LLC, in Dark Reading

Weaponization of AI model

- The AI system is compromised with malicious code and then used to distribute that code throughout the organization (e.g. ransomware attack).

- Access to knowledge of the AI model, system, and training data should be on a need-to-know basis.

- Unverified code should not be incorporated into the AI system.

Sponging

- A series of difficult-to-process inputs is entered into the AI model to slow down its processing speed and increase energy consumption (similar to a denial-of-service [DoS] attack).

- The AI system should be designed with a failure threshold to prevent excessive energy consumption.
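A failure threshold of this kind could take many forms. The sketch below shows one possible shape, offered as an assumption rather than a recommended design: a hypothetical `ComputeBudget` class that rejects oversized inputs and stops accepting requests once the processing time recorded within a rolling window passes a limit. All parameter names and limits are invented for the example, and timestamps are passed in explicitly to keep the behavior easy to follow.

```python
from collections import deque


class ComputeBudget:
    """Refuses work once processing time in a rolling window exceeds a threshold."""

    def __init__(self, max_seconds: float, window_seconds: float, max_input_chars: int):
        self.max_seconds = max_seconds          # total processing time allowed per window
        self.window_seconds = window_seconds    # length of the rolling window
        self.max_input_chars = max_input_chars  # hard cap on a single input's size
        self._events = deque()                  # (timestamp, cost_seconds) pairs

    def _spent(self, now: float) -> float:
        # Drop events that have aged out of the window, then total what remains.
        while self._events and now - self._events[0][0] > self.window_seconds:
            self._events.popleft()
        return sum(cost for _, cost in self._events)

    def allow(self, prompt: str, now: float) -> bool:
        """Return True if the prompt may be processed at time `now`."""
        if len(prompt) > self.max_input_chars:
            return False
        return self._spent(now) < self.max_seconds

    def record(self, now: float, cost_seconds: float) -> None:
        """Record how long a processed request took."""
        self._events.append((now, cost_seconds))
```

A caller would check `allow` before sending an input to the model and call `record` afterward with the measured processing time.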

AI-assisted cyberattacks

Regardless of whether an AI system is public or private, we must all contend with the risk that someone else will use Gen AI to facilitate a familiar cyberattack.

Phishing

- Gen AI can be used to create convincing phishing emails, not just with text, but with images, sound, and video (i.e. deepfakes).

Malware

- Gen AI chatbots are programmed not to help people carry out illegal activities. However, the way the question is asked can influence the chatbot’s willingness to comply.

“AI generation provides a novel extension of the entire attack surface, introducing new attack vectors that hackers may exploit. Through generative AI, attackers may generate new and complex types of malware, phishing schemes and other cyber dangers that can avoid conventional protection measures.” – Terrance Jackson, Chief Security Advisor, Microsoft, in "Exploring," Forbes, 2023

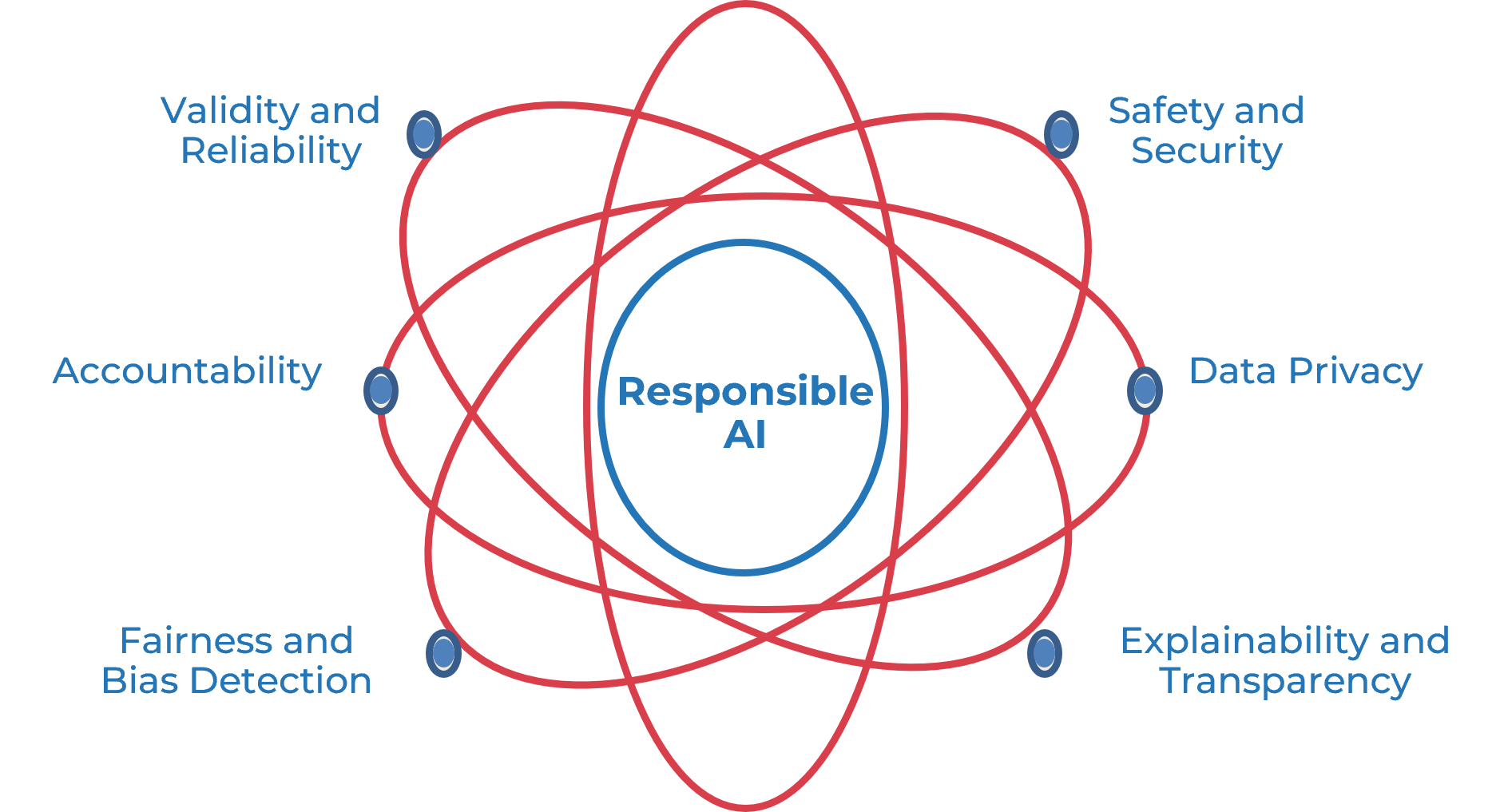

Guiding principles of responsible AI

Principle #1 – Privacy

Individual data privacy must be respected.

- Do you understand the organization’s privacy obligations?

Principle #2 – Fairness and Bias Detection

Unbiased data will be used to produce fair predictions.

- Are the uses of the application represented in your testing data?

Principle #3 – Explainability and Transparency

Decisions or predictions should be explainable.

- Can you communicate how the model behaves in nontechnical terms?

Principle #4 – Safety and Security

The system needs to be secure, safe to use, and robust.

- Are there unintended consequences to others?

Principle #5 – Validity and Reliability

Monitoring of the data and the model needs to be planned.

- How will the model’s performance be maintained?

Principle #6 – Accountability

A person or organization must take responsibility for any decisions that are made using the model.

- Has a risk assessment been performed?

Principle #n – Custom

Add principles that address compliance or are customized for the organization/industry.

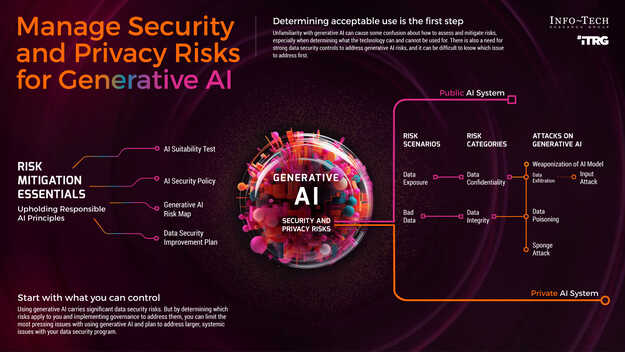

Gen AI essentials

1. AI suitability test

Before committing to Gen AI deployment, make sure the benefits outweigh the risks and that there is a specific advantage to using Gen AI as part of a business process.

2. Gen AI risk mapping

Risks will emerge depending on use and therefore will vary somewhat between organizations. Determining which ones apply to you will affect how you govern Gen AI use.

3. Gen AI security policy

A policy detailing required security protocols and acceptable use for Gen AI is the most immediate step all organizations must take to deploy Gen AI securely.

4. Data security improvement plan

Enterprise use of Gen AI carries significant risks to data security. If any current controls are insufficient to account for Gen AI risks, a plan should be in place to close those gaps.

Determining acceptable use is the first step

Start with what you can control

Using Gen AI carries significant data security risks. By determining which risks apply to you and implementing governance to address them, you can limit the most pressing issues with using Gen AI and plan to address larger, systemic issues with your data security program.

Look for problems before getting invested

While Gen AI opens many possibilities, some risks will be difficult to address. For example, if your proposed use case requires sensitive data to be entered into a public AI system to produce an output for use in your supply chain, it will be virtually impossible to mitigate such risks effectively.

Build a strong perimeter

AI security is still in its early stages and best practices are still being determined. Until more specific controls and techniques are developed, the best course of action is to use a robust data security program to make your sensitive data as difficult to access as possible, and to monitor for intrusions.

Risk likelihood just went up

The use of Gen AI to facilitate cyberattacks doesn’t fundamentally change the nature of the risk. But because Gen AI makes the process easier, we should account for this in our risk assessments.

Watch for overlap

There will usually be both an input and an output component when using Gen AI, which means both risk factors are present, but one may be dominant. Therefore, both inputs and outputs should receive sign-off before use to limit data confidentiality and integrity risks.

Blueprint deliverables

Each step of this blueprint is accompanied by supporting deliverables to help you accomplish your goals.

Key deliverable:

AI Security Policy Template

Set standards for data confidentiality and integrity, acceptable use, and technical IT controls.

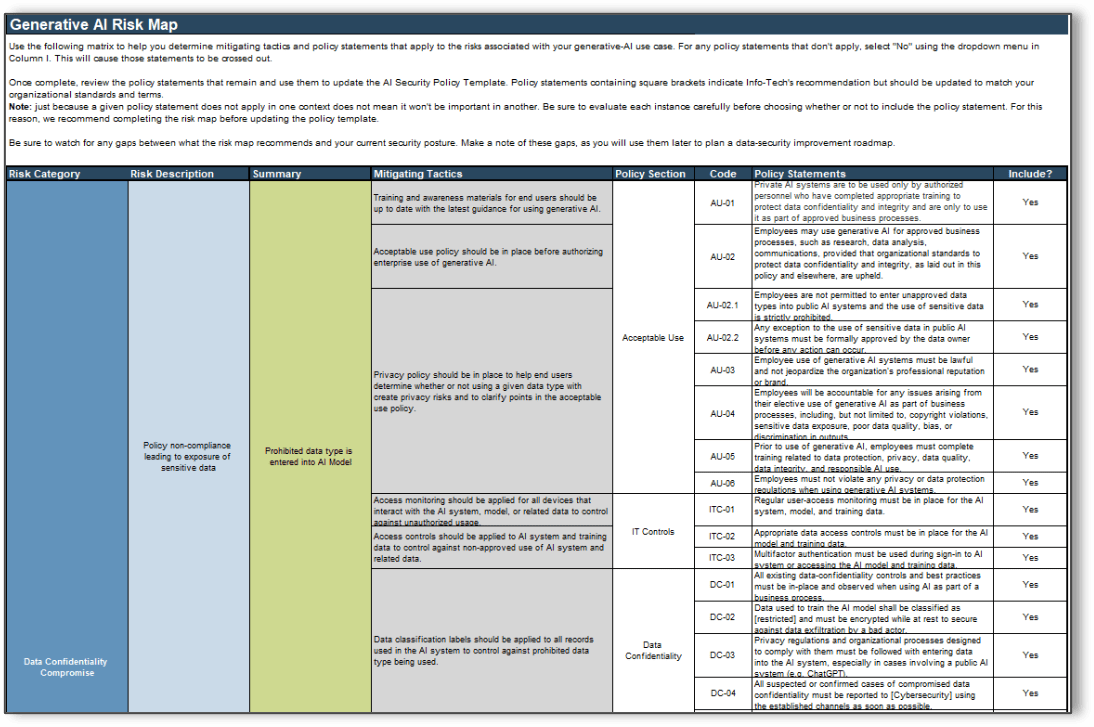

Generative AI Risk Map

Determine the risks associated with your Gen AI use case and the applicable policy statements.

Blueprint benefits

IT Benefits

- Improved understanding of AI-related risks and how they apply to your use cases

- Lower risks associated with near-term use of Gen AI by setting acceptable use standards

- Long-term risks associated with AI addressed via long-term security planning

- Reduced data loss incidents related to use of public Gen AI systems

Business Benefits

- Increased productivity via Gen AI

- Lower regulatory risks related to the use of Gen AI

- Defined standards for how AI can be used, avoiding additional legal risks related to copyright infringement

Measure the value of this blueprint

Expedite your policy and lower risk

| Work to complete | Average time to complete | Info-Tech method | Time saved |
|---|---|---|---|
| Write Gen AI security policy | 8 days – research risks, determine requirements, interview stakeholders, draft and revise policy | 0.5 days | 7.5 days |

| Improvement metrics | Outcome |
|---|---|
| Reduced risk of data confidentiality compromise | 50% reduction of risk over a one-year period |
| Reduced risk of data integrity compromise | |
| Reduced risk of input attack | |
| Reduced risk of data poisoning | |
| Reduced risk of sponge attack | |

IT leaders prioritizing Gen AI in the next 18 months: 67%

(Source: Salesforce, 2023)

Determine Gen AI suitability

Some things are better done the old-fashioned way

Before determining the specific security and privacy risks associated with your desired use for Gen AI (and how to address them), consider whether AI is the best way to achieve your goals.

Info-Tech’s AI Suitability Test

- What are the benefits of using Gen AI for this purpose?

- Does the intended purpose involve entering sensitive data into the AI system?

- Does the intended purpose incorporate Gen AI outputs into business processes or the supply chain?

- How severe would the impact be if sensitive data were exposed?

- How severe would the impact be if a faulty output were used?

- Will a public or private AI system be used?

- What alternatives exist to achieve the same goal and what drawbacks do they have?

- Considering your answers to the above questions, how suitable is AI for the proposed purpose?

(Sources: Belfer Center, 2019; “Data Privacy,” Forbes, 2023)
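Answers to the suitability test can be recorded in a structured form so that obvious concerns surface consistently across use cases. The sketch below is one hypothetical way to do that and is not Info-Tech's tool: the `SuitabilityAnswers` fields, the low/medium/high impact scale, and the flag wording are all assumptions made for this example. It deliberately returns flags for discussion rather than a pass/fail verdict, since the final question calls for judgment.

```python
from dataclasses import dataclass


@dataclass
class SuitabilityAnswers:
    benefits: str                        # benefits of using Gen AI for this purpose
    enters_sensitive_data: bool          # is sensitive data entered into the AI system?
    outputs_feed_business_process: bool  # do outputs enter business processes or the supply chain?
    exposure_impact: str                 # "low", "medium", or "high" (hypothetical scale)
    faulty_output_impact: str            # same hypothetical scale
    uses_public_system: bool             # True for a public AI system, False for private
    alternatives: str                    # alternatives and their drawbacks


def review_flags(answers: SuitabilityAnswers) -> list[str]:
    """Return concerns that reviewers should discuss before approving the use case."""
    flags = []
    if not answers.benefits.strip():
        flags.append("No specific benefit identified")
    if answers.enters_sensitive_data and answers.uses_public_system:
        flags.append("Sensitive data would enter a public AI system")
    if answers.enters_sensitive_data and answers.exposure_impact == "high":
        flags.append("High impact if sensitive data were exposed")
    if answers.outputs_feed_business_process and answers.faulty_output_impact == "high":
        flags.append("High impact if a faulty output were used")
    return flags
```

An empty list does not mean the use case is suitable; it only means none of these example conditions were triggered.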

“[T]he outcomes of ... AI suitability tests need not be binary. They can ... suggest a target level of AI reliance on the spectrum between full autonomy and full human control.” – Marcus Comiter, Capability Delivery Directorate at DoD Joint Artificial Intelligence Center

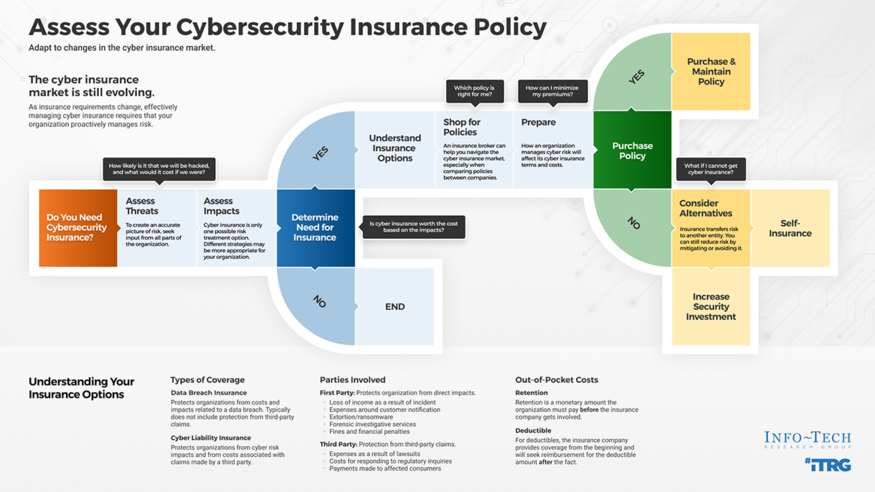

Assess Your Cybersecurity Insurance Policy

Assess Your Cybersecurity Insurance Policy

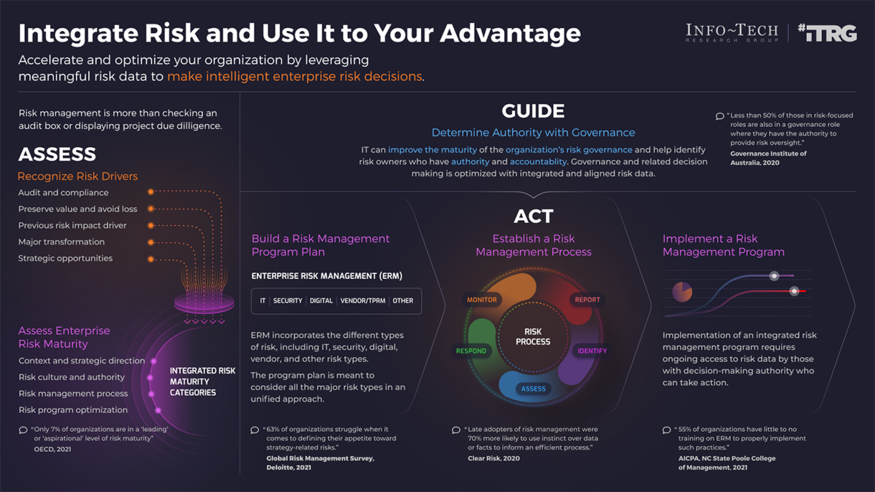

Achieve Digital Resilience by Managing Digital Risk

Achieve Digital Resilience by Managing Digital Risk

Combine Security Risk Management Components Into One Program

Combine Security Risk Management Components Into One Program

Prevent Data Loss Across Cloud and Hybrid Environments

Prevent Data Loss Across Cloud and Hybrid Environments

Build an IT Risk Management Program

Build an IT Risk Management Program

Close the InfoSec Skills Gap: Develop a Technical Skills Sourcing Plan

Close the InfoSec Skills Gap: Develop a Technical Skills Sourcing Plan

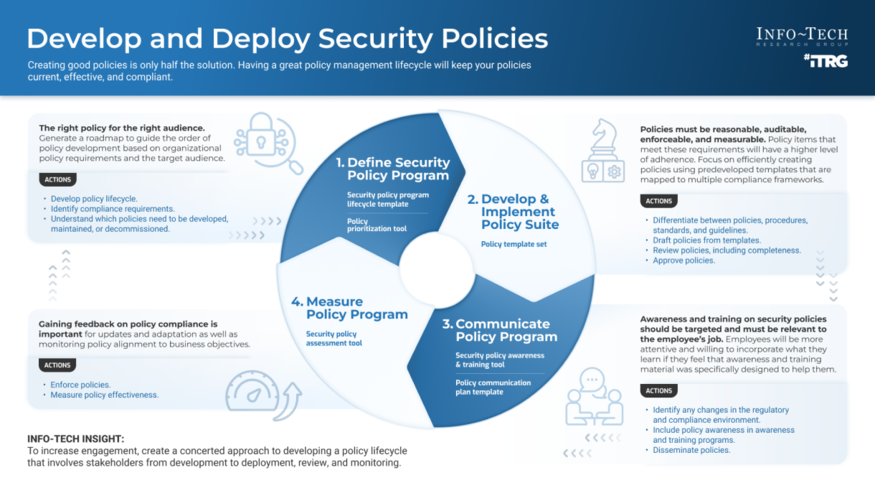

Develop and Deploy Security Policies

Develop and Deploy Security Policies

Fast Track Your GDPR Compliance Efforts

Fast Track Your GDPR Compliance Efforts

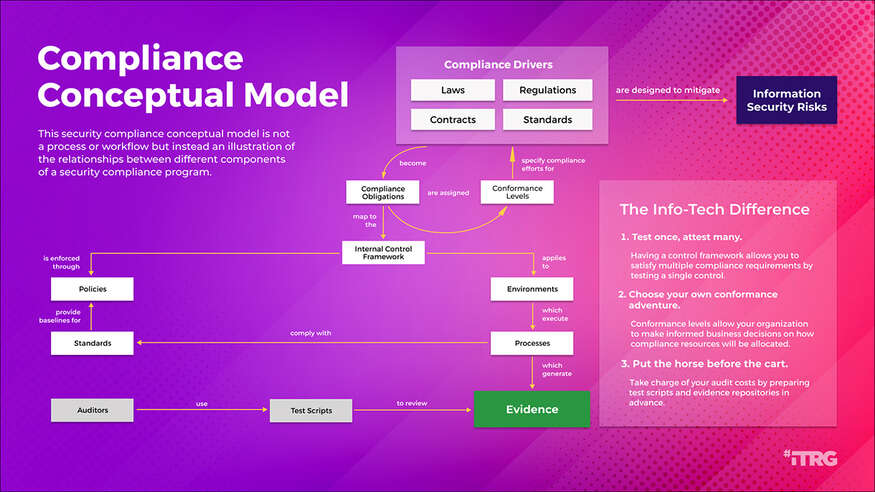

Build a Security Compliance Program

Build a Security Compliance Program

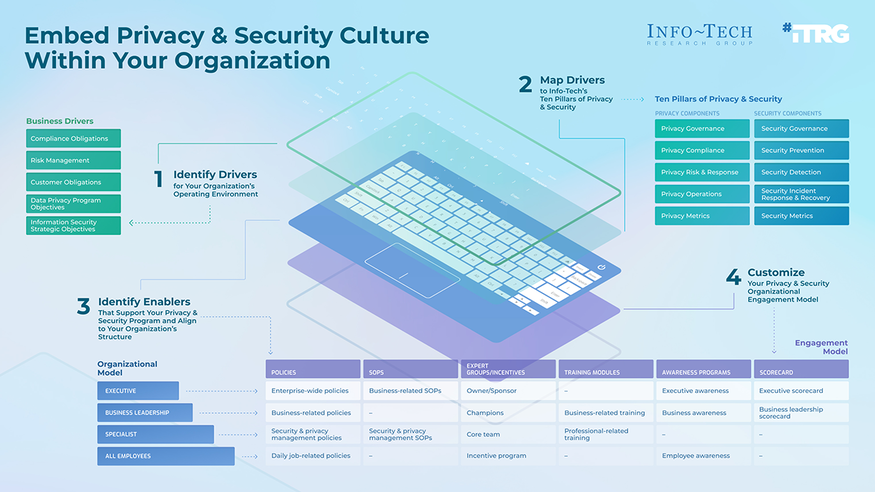

Embed Privacy and Security Culture Within Your Organization

Embed Privacy and Security Culture Within Your Organization

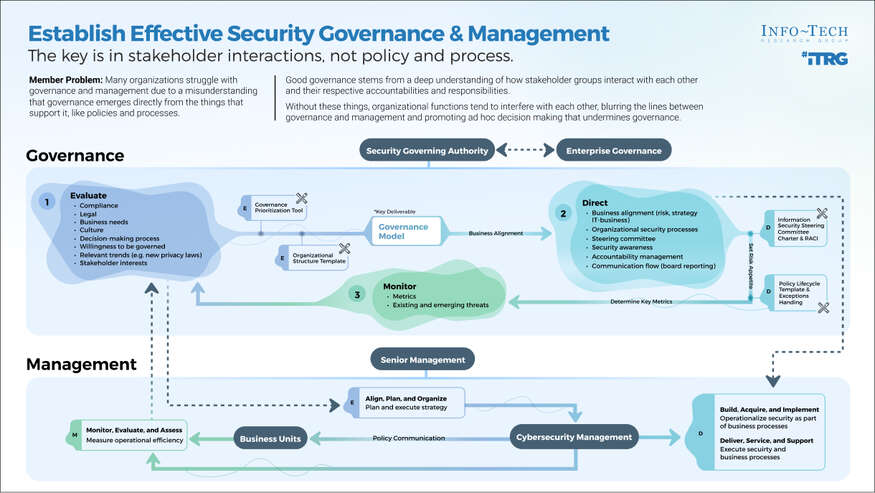

Establish Effective Security Governance & Management

Establish Effective Security Governance & Management

Improve Security Governance With a Security Steering Committee

Improve Security Governance With a Security Steering Committee

Develop Necessary Documentation for GDPR Compliance

Develop Necessary Documentation for GDPR Compliance

Reduce and Manage Your Organization’s Insider Threat Risk

Reduce and Manage Your Organization’s Insider Threat Risk

Satisfy Customer Requirements for Information Security

Satisfy Customer Requirements for Information Security

Responsibly Resume IT Operations in the Office

Responsibly Resume IT Operations in the Office

Master M&A Cybersecurity Due Diligence

Master M&A Cybersecurity Due Diligence

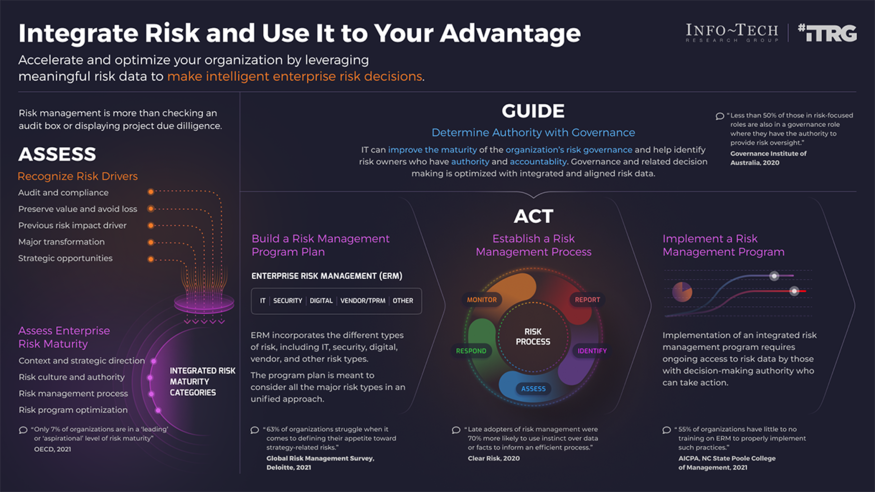

Integrate IT Risk Into Enterprise Risk

Integrate IT Risk Into Enterprise Risk

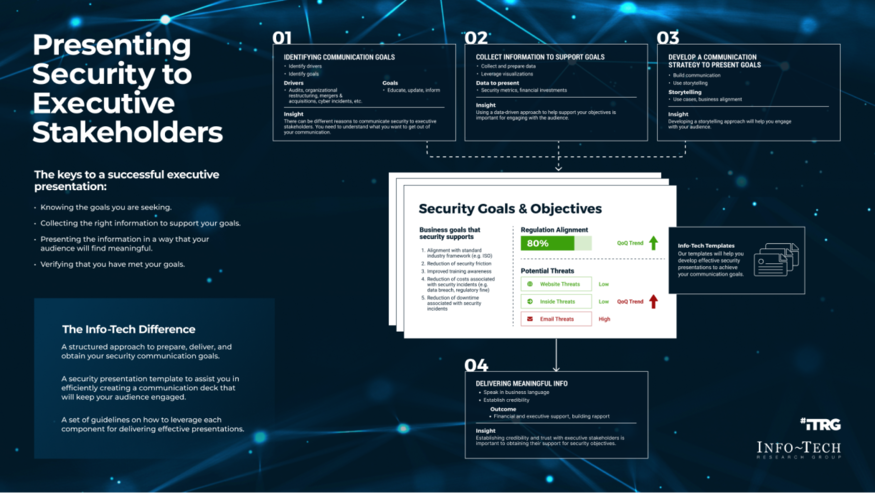

Present Security to Executive Stakeholders

Present Security to Executive Stakeholders

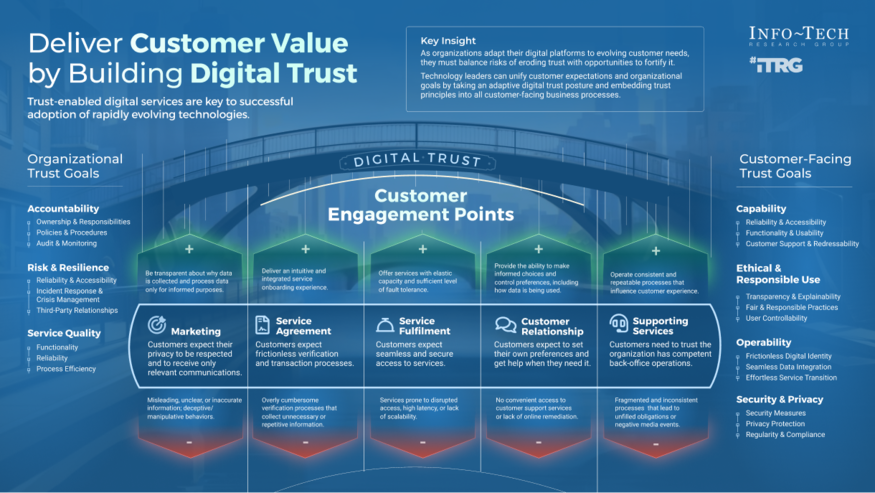

Deliver Customer Value by Building Digital Trust

Deliver Customer Value by Building Digital Trust

Address Security and Privacy Risks for Generative AI

Address Security and Privacy Risks for Generative AI

Protect Your Organization's Online Reputation

Protect Your Organization's Online Reputation